Evaluation and adaptive learning at work: Insights from RF MERL Mali

Rapid Feedback Monitoring, Evaluation, Research and Learning (RF MERL) is a USAID Bureau for Planning, Learning, and Resource Management initiative that co-designs rigorous-yet-flexible approaches to generating timely and useful data for USAID and its partners. The Results for Development (R4D)-led RF MERL consortium has partnered with USAID/Mali to support two Integrated Health Project (IHP) programs. The first, Keneya Sinsi Wale (KSW), provides support at the regional level to strengthen health systems, financing, and governance, while the second, Keneya Nieta (KN), works at the village level to improve sustainable delivery of community-based essential health services. This blog shares key insights from interviews with the RF MERL/Mali team and implementing partners.

Impact evaluations and other traditional evidence generation methods have been an essential part of the international development evaluation space for decades. Yet there’s an appetite in this area for adaptive learning approaches, which take a more flexible approach to evaluation methodologies and emphasize locally led research and earlier decision-making. Funders and local partners know that both strands of inquiry are valuable, but they have questions about how traditional and innovative methods can work together.

Over the past 10 years, RF MERL has experimented with ways to carry out learning and decision-focused activities alongside traditional impact evaluations. We often hear the following questions when discussing the RF MERL approach:

- Is it possible to utilize both an impact evaluation and adaptive learning?

- Why is adaptive learning beneficial?

- When is it best to add earlier-stage feedback loops to an impact evaluation?

- How do I carry out adaptive learning and an impact evaluation simultaneously?

- How can I look at the project holistically, rather than as two separate workstreams?

Over time, we’ve seen that these two distinct approaches can reinforce and complement each other. In our work on RF MERL/Mali, we designed a classically rigorous impact evaluation that works in tandem with a series of adaptive learning activities to build a stronger program in the long term.

Using RF MERL/Mali as a case study, here are several key insights on how to utilize — and combine — these two approaches.

You don’t have to choose between an impact evaluation and adaptive learning methods.

Adaptive learning reinforces — and does not preclude — a later impact evaluation.

In fact, adding adaptive learning activities to our impact evaluation in Mali created learning outcomes that were greater than the sum of their parts.

Impact evaluations and adaptive learning activities often operate as standalone engagements. Impact evaluations provide a retrospective analysis of what has occurred during a program’s implementation; they do not engage with the day-to-day decision-making that is required during delivery. Adaptive learning activities, on the other hand, focus on learning about and improving frontline issues during a project. Their sharp focus and short timeline, however, mean that adaptive learning activities will not provide conclusive evidence on long-term program impact.

Measuring program impact typically involves introducing a single intervention and collecting data over a set period to ascertain whether it achieved its intended effect. In a traditional impact evaluation, introducing additional activities such as adaptive learning exercises might cause researchers to lose the ability to identify which intervention caused the impact. We argue, however, that adaptive management is a core component of strong program implementation: it seeks only to improve implementation and performance (take-up, adherence, sustainability, etc.), not to change the nature of the activity or intervention. RF MERL supports adaptive management teams by promoting evidence-based decision-making and strengthening existing program evidence rather than introducing a confounding variable.

Blending an impact evaluation with adaptive learning provides learning that can be embedded into long-term program work with outcomes measured by the impact evaluation. If they are effective, adaptive learning activities can accelerate successful program implementation, leading to a greater program impact.

“I think the two approaches are complementary because together, the work that we’re doing here will go beyond the life of the project itself. The stakeholders that we engage in [adaptive learning activities] are members of the community. Now that the community is engaged in our work, it will have impact long after we’re finished.”

–Birama Djan Diakite, KSW team member

Adaptive learning activities can help to interrogate early-stage outcomes in a long causal chain.

USAID/Mali’s Integrated Health Project uses funding for local governance initiatives to improve health outcomes. This causal chain – the sequence of intermediate outcomes that must be achieved to attain program impact – is long. It would take many years for an impact evaluation to pick up on whether changes to local government financing produced an impact on community health. Adding adaptive learning work to the impact evaluation design allowed the team to figure out where there might be breakdowns early in the causal chain and test how to resolve them.

“We know that the causal chain between governance and health outcomes is very long and not always straightforward, but impact evaluations are still important to discover program outcomes. Adaptive learning activities help partners to involve local stakeholders and improve implementation to support these program outcomes.”

–Dr. Eric Djimeu Wouabe, RF MERL/Mali principal investigator

Trust in local governance is one potential area that could limit program impact early in the causal chain. If community members do not believe that local officials are working in the community’s best interest, they are less likely to attend any health-related community events that the official organizes. This trust is difficult to measure and build but essential for improved health outcomes. RF MERL worked with KN and KSW in adaptive learning activities to engage communities in local health governance through community meetings and awareness campaigns.

As a result of these adaptive learning activities, RF MERL saw increased community engagement in local health governance, reflected in responsiveness to awareness campaigns and participation in health-focused community meetings. KN and KSW will integrate adaptive learning activity findings into their current workplans, which may contribute to the programs’ overall effectiveness.

Pursuing adaptive learning alongside an impact evaluation requires deep partnership and a heightened attention to timing.

Strong collaboration between implementing partners, the MEL partner and funders is essential.

Adaptive learning activities, which focus on each program’s unique frontline needs, required that KN, KSW and USAID/Mali team members engage deeply and regularly with the RF MERL team. This can present a challenge for partners that are already occupied with their program activities. Yet collaborating with RF MERL gave KN and KSW team members an impetus to pause, reflect on and learn from their program implementation in a manner that would otherwise have been impossible because of their limited bandwidth and resources.

“The collaboration with RF MERL around the [adaptive learning activities] was very useful because we were having discussions that probably the project wouldn’t have otherwise had. It helps KN to really reinforce and make the activities that we are implementing better by using the additional information that is provided through the [adaptive learning] exercises.”

–Dr. Eckhard Kleinau, KN team member

Building relationships with partners during the adaptive learning activities also strengthens trust for continued collaboration during the impact evaluation. The frequent opacity between implementing partners, MEL partners and funders during a traditional impact evaluation can cause tension when projects inevitably encounter obstacles. When all partners have previously worked together during adaptive learning design and implementation, however, pre-established communication channels allow for honesty and collaborative problem-solving if obstacles arise.

Managing project timelines is essential.

Impact evaluations and adaptive learning activities work well together because they offset each other’s weaknesses: impact evaluations indicate whether a program worked but do not indicate why, while adaptive learning activities strengthen design without detailing overall program effectiveness. To learn as you go and identify a program’s results, adaptive learning implementation and data collection must be precisely sequenced.

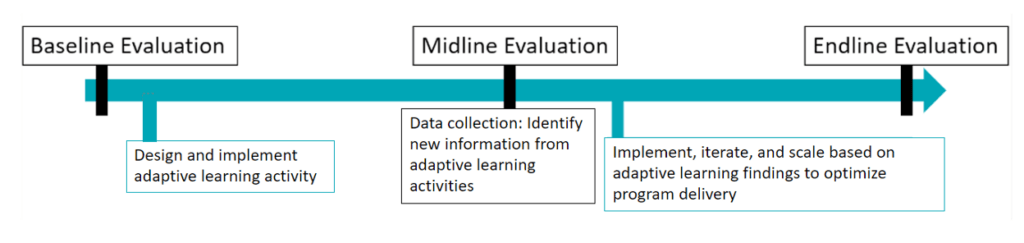

RF MERL/Mali Timeline, as originally designed:

RF MERL used the annual data collection effort for the impact evaluation to collect additional data for adaptive learning activity analysis. This sequencing allowed the MEL team to understand the quantitative impact of adaptive learning activities and conduct qualitative key informant interviews for the impact evaluation. The parallel timeline maximizes learning while using resources efficiently.

It can be difficult to synchronize adaptive learning activity implementation, implementing partners’ workplans and data collection. Adaptive learning activities require extensive participation from all partners in a project, external government or institutional review board approval and community engagement, all of which can be time-consuming. On-the-ground attention from a study coordinator is particularly important in this dual-methodology context to make sure that all aspects of the project are running smoothly and on time.

RF MERL is continuing to explore how methodologies work together.

Our work with RF MERL/Mali provides a compelling example of how adaptive learning exercises can support traditional impact evaluation. This two-pronged methodological approach was chosen because the RF MERL team is committed to thinking creatively about how to best use the methodological tools at our disposal.

The monitoring, evaluation, research and learning field is constantly experimenting with traditional and nontraditional approaches to our work. RF MERL is committed to this exploration, and we know that it is critical to find ways to combine different methods in a manner that enhances overall learning outcomes.